Yes, I *Really Would* Sacrifice Myself For 10^100 Shrimp

Morality as performance vs morality as "damnit I guess I'll do the right thing"

Dylan wrote a post titled “The Utilitarians are Gaslighting You” that made this claim:

If it was your own life that must be sacrificed to save 10^100 shrimp, would you volunteer? If it was the life of your partner, or your mother, or your child that was required, would you sign the waiver?

My premise is, again: “obviously not.”

My response: Yes, I absolutely would, in a heartbeat.

Don’t get me wrong, I’m not Mother Teresa. If God descended from the heavens and offered me the choice, I wouldn’t be overjoyed to sacrifice myself for a bunch of weird alien crustaceans. I would certainly hope that it was a trap, or untrue, or that there was another way, and I would sign the waiver with resignation, after asking God a bunch of questions, probably including “hey bro, seems like you’re omnipotent, or at least potent enough to save the shrimp and also save me and my family, what’s the deal dude.” I would also wish I were doubly sure that shrimp are conscious, even though the best evidence says they almost certainly are; and I would definitely want to know what “saved” means. If saved means they get to live a terrible existence crammed into a 10^100 inch tank with all 10^100 other shrimp, then I ain’t doing squat. But if the shrimp are gonna live pretty good lives after they’re saved, or if I’m saving them from being in lots of pain…

Well, I would do it. In fact, I think the stakes are so lopsided I would probably sign the waiver, resigned in sacrifice, even if the entire population of Earth was at stake.

I want to explain both why I would do this and why I think this kind of question, about what you would really do in a situation instead of what you should do, is the opposite of my conception of morality.

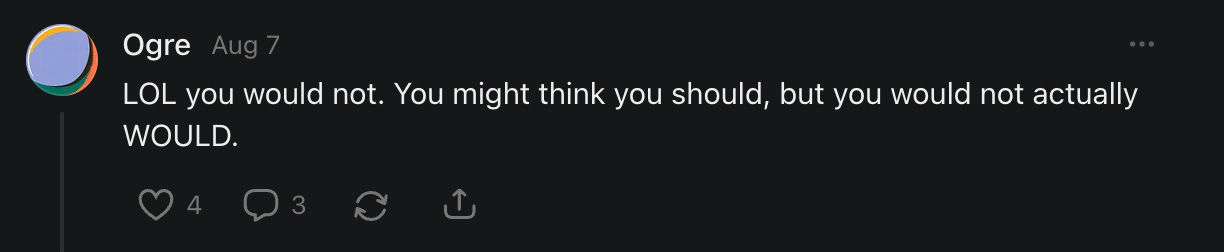

First, something strange happened when I posted a note saying I would:

Is it really that hard to believe I’d sign a waiver that gives me instant death in exchange for universes and universes of good lives? The resistance to accepting that I would do this seems really weird, since it’s not even a hard task: I sign a paper and then I die. Maybe if they said “you have to run down 100 strangers and kill them with no weapons except two kitchen ladles for 10^100 shrimp,” it would be a really difficult task (even though you should still desperately try). In that case, you could say “Hah! I think you’re too much of a coward” and pwn me in the marketplace of ideas. But no, I literally have to sign a paper, which doesn’t require much executive override from the thinking brain.

So what’s the deal with the doubt? I think the resistance here is born out of disbelief: “surely you don’t actually care about what a bunch of shrimp lives are like? Surely this is a performance?”

And I want to stress first that I don’t really feel any empathy for shrimp! They’re weird creatures; evolution didn’t imbue me with empathy for them the way it did for my friends and family. But throughout society’s existence, after the war and bloodshed, we’ve accepted that those we feel no empathy for are people too, with real lives lived. We’ve accepted that people in foreign countries are not backward savages but real people living real lives, even if they don’t tug at our heartstrings. If someone is living a life, and they’re in pain, I want to stop that pain and have them live a good life, even if they’re just some random person in the far corners of the world.

At the heart of caring about foreigners, animals, and yes, even shrimp, is the crazy notion that if there’s a real someone experiencing deep pain, they matter. Even if they don’t look exactly like you.

And now for the more boring, mathy side: 10^100? Are you fucking crazy? 10^100????? This is the real reason this waiver is so goddamn easy to sign. If I asked you to either sacrifice yourself or let the ENTIRE EARTH except you die, or maybe be tortured for a couple hundred years, I would hope you would think “gee, I like my life, but maybe other people matter.” That choice is, for you, exactly as easy as signing the waiver for the shrimp is for me, because I think numbers matter. The only way I can get you to feel just how deeply numbers matter is by invoking money, because that’s the only place where scope insensitivity doesn’t reign: if I asked you to do a jumping jack for 1 cent, you probably wouldn’t care, but if I asked you to do a jumping jack for 10 billion dollars, you might suddenly be willing to do a couple of jumping jacks, or maybe even some stuff you wouldn’t ordinarily like to do.[1]

At the heart of utilitarianism is the crazy notion that if there is 100 times more bad stuff, then it matters 100 times as much. 100 people in pain is 100 times worse than 1 person in pain. 10^100 people in pain is 100 times worse than 10^98 people in pain. If you have any hesitation at all about crushing a shrimp, about watching a living being’s legs writhe as it dies, if you think the suffering of a conscious being is bad in literally any way, then if I amplify that badness 10^100 times, it will swamp everything you’ve ever cared about in the entire world.[2] That’s just math; big number = big.

If I would sacrifice 1 person for 10^90 shrimp, then mathematically, I should be willing to sacrifice the entire Earth for 10^100 shrimp. That’s literally just doing the same multiplication to both sides of the equation; guys, you learn that in 6th grade.
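Spelled out, the multiplication looks roughly like this. This is a sketch, assuming (as the utilitarian premise above does) that how much a group matters scales linearly with the number of beings in it, so that $V(k\,x) = k\,V(x)$; here $V(\cdot)$ is just a hypothetical label for “how much this group matters,” and Earth’s population is taken as roughly 8 billion:

$$
V(1\ \text{person}) < V(10^{90}\ \text{shrimp})
\;\Longrightarrow\;
10^{10}\,V(1\ \text{person}) < 10^{10}\,V(10^{90}\ \text{shrimp})
\;\Longrightarrow\;
V(10^{10}\ \text{people}) < V(10^{100}\ \text{shrimp})
$$

Since roughly $8 \times 10^{9}$ people is fewer than $10^{10}$, the whole Earth lands on the shrimp side of the ledger with room to spare.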

But isn’t all of this stupid? At the core of this is the idea that to think something is moral, you have to be willing to do it, which I believe misses the point entirely. Let me illustrate this with Dylan’s[3] other point in the article.

A local hospital has 6 patients: five young adults dying of organ failure but otherwise healthy, and one healthy minor there with his parents for a routine checkup. It is possible to save all 5 of the ill patients by transplanting the minor’s organs, killing him in the process. The procedure is legal as long as consent is obtained, but as the healthy patient is a minor it is the signatures of his parents that is required. The surgeon briefs them on the situation, and, as a utilitarian, he explains the ethical decision would be to sacrifice their child for the greater good.

Are there any utilitarians in this scenario, in the role of the parents, who would accept the surgeon’s argument and agree to trade the life of their child for the lives of 5 strangers?

My answer to what I would actually do in this situation: Say hell no. You kidding me? I love my friends and family more than anything else in the world, no way am I gonna sacrifice them for some random nerds.

But I don’t have kids, so let’s talk about what I would do if I was the one being asked. If the doctors said “hey, you can give your organs for the five,” you know what I would say?

Hell no. You kidding me? I love myself more than almost anything else in the world, no way am I gonna sacrifice myself for some random nerds.

But here’s the stress point: I think refusing to sacrifice the few to save many is not the moral choice. I think when I value myself over the welfare of many other people, I am being selfish. I would not turn to Gandhi or Martin Luther King Jr. and say “you did not make the moral decision; why did you sacrifice so much for all of those other people you weren’t primed evolutionarily to care about? Idiots.” I think my unwillingness to make a good choice says nothing about how good the choice actually is.

And there’s an easy out for a utilitarian: “oh well, doing this once sets a precedent, so a doctor should never do this, blah blah blah.” That’s true; in the real world you should never do this. But it misses the point: the utilitarian says the moral choice is indeed to violate some abstract, human idea of “rights” in favor of making the world a better place in a real way. It calls the abstract virtues we wrestle with in our heads hogwash, and says what matters is the emotions and fulfillment of the conscious beings who exist, not some purity of ideals. It says that humans are stuck in their own heads, with their silly social conventions and tribes. It says that people are people, and an ideal will never matter more than a person.

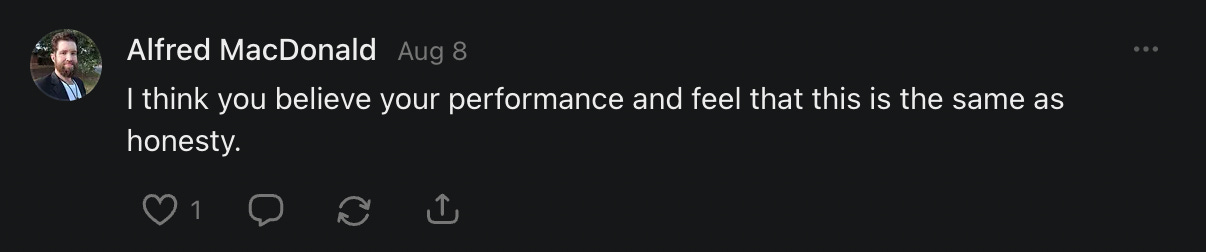

By the way, I asked Dylan if he would sacrifice himself to save the entire Earth, and this was his response:

This gets my conception of morality so wrong that I wonder if the word “morality” is a bad word for the situation.

To be clear: When I say morality, I am talking about selflessness. I am talking about caring about other people, grounded in the assumption that other people are real, conscious individuals who exist. I am not looking for an evolutionary justification for why you personally wouldn’t care about other people. I am not looking for a list of ways to justify that you, personally, feel like a good person. I don’t really care if you feel like a good person while killing billions!

I’m not religious, but fuck it, when I think of morality I think of Jesus. Love thy neighbor as thyself. Compassion for the enemy; even those who have cast you aside are worthy of love. Caring about lepers and the “untouchables” when no one else would. Morality is not a tool for justifying why those who crucified Jesus probably made the right call, since evolution made them love their friends and family more than the vicious, weird outsiders they crucified. If deliberately making the world a worse place for selfish reasons counts as morality, then I will never use the word morality again; that’s not what I’m talking about.

I would hope that the moral of the Grand Christian Tale is that it didn’t matter that everyone hated Jesus, because he was doing good.

The truth is that I’m not friends with the entire world. I’m friends with my friends! Their ‘good’ is what I care about most. If that were not the case and all ‘good’ to every person meant the same to me, as alleged by Utilitarianism, then to be my friend would mean nothing at all.

We can now see exactly where Dylan’s closer goes wrong: morality doesn’t ask “what do you personally care about?” It asks “how can you make the world a better place?” And the world does not privilege your friends; you do! Love and care for them as if they were your family. It’s what we cling to in this world.

But no, the moral decision is not to sacrifice the Earth for yourself. Be like Jesus or whatever. The unloved matter too.

Subscribe and uhhhhh like as well. Also, if you enjoyed this post you might like this one too:

Is All of Human Progress For Nothing?


[1] I’ll suck someone’s dick for 10 billion dollars if anyone makes the offer. Gonna need to see that in writing, and I would need to be pretty confident that I’m gonna get the money, but I’ll do it, goddamn it. Moneyyyyyy. I’ll suck someone’s dick for 10^100 shrimp too.

[2] Though the principle is true, sacrificing the Earth is a little different from sacrificing one person, because you’d also be killing all FUTURE humans. So if the shrimp are all gonna die and don’t have a future, it’s a bad idea to sacrifice the Earth, especially if we keep growing exponentially and maybe use our brains to have incredible lives in the future. BUT the principle is true: if there were 2 Earths, I’d be more than willing to sacrifice 1; it’s just that the specifics of wiping out a smart species matter. Also, if you change it to 10^10000, all of these caveats become moot, so just make the number bigger.

[3] By the way, Dylan is a cool guy with lots of good essays about culture, science, and philosophy. I encourage you to read some of them! I would compliment him in the actual essay, but it would mess up my flow. Sorry, Dylan, you gotta understand: the flow of the article, Dylan. The flow. Think of the flow. You’ll have to live with this extended footnote.
